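I've been following the property market lately, and refreshing listing sites every day or watching real-estate agents' WeChat Moments posts is incredibly draining. So I used the weekend to write a scraper that does it automatically.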

```python
# ErShouFang.py
# Second-hand housing scraper for Ganji.com and 58.com
# Ande.Studio (c) 2019-08-11

import time
from urllib.parse import urljoin

import requests
from lxml import etree

cookies = {
}

headers = {
    'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64; rv:68.0) Gecko/20100101 Firefox/68.0',
}


def GanjiErShouFang(url):
    # Walk the result pages with a loop instead of recursion, so errors are not
    # silently swallowed and deep pagination cannot exhaust the call stack.
    while True:
        response = requests.post(url, headers=headers, cookies=cookies, timeout=10)
        html = etree.HTML(response.text)
        flist = html.xpath('//div[@class="f-list-item ershoufang-list"]')  # listing entries
        for f in flist:
            title = f.xpath('.//dd[@class="dd-item title"]/a/text()')[0]  # title
            href = f.xpath('.//dd[@class="dd-item title"]/a/@href')[0]  # link
            spans = f.xpath('.//dd[@class="dd-item size"]/span/text()')
            layout = spans[0]  # layout (rooms)
            size = spans[1]  # floor area
            toward = spans[2]  # orientation
            floor = spans[3]  # floor
            address = f.xpath('.//dd[@class="dd-item address"]/span[@class="area"]/a/text()')[0]  # location
            eara = f.xpath('.//dd[@class="dd-item address"]//span[@class="address-eara"]/text()')
            area = eara[0]  # residential compound
            manager = eara[1]  # agent
            organization = f.xpath('.//dd[@class="dd-item address"]/span[@class="area"]/text()')[3]  # agency
            organization = organization.replace(" ", '').replace("-", '').replace("\n", '')
            price = f.xpath('.//dd[@class="dd-item info"]/div[@class="price"]/span[@class="num"]/text()')[0]  # total price
            average = f.xpath('.//dd[@class="dd-item info"]/div[@class="time"]/text()')[0]  # price per square metre
            average = average.replace("元/㎡", '')
            print(title)
            print("#" * 50)
        nextpage = html.xpath('//div[@class="pageBox"]//a[@class="next"]/@href')  # next-page link
        if not nextpage:
            break  # last page reached
        url = urljoin(url, nextpage[0])  # works for both absolute and relative links
        time.sleep(3)  # pause 3 s so frequent requests don't trigger bot verification


def WubaErShouFang(url):
    while True:
        response = requests.get(url, headers=headers, timeout=10)  # POST returns nothing here; GET works
        html = etree.HTML(response.text)
        flist = html.xpath('//ul[@class="house-list-wrap"]/li')  # listing entries
        for f in flist:
            title = f.xpath('.//h2[@class="title"]/a/text()')[0]  # title
            href = f.xpath('.//h2[@class="title"]/a/@href')[0]  # link
            spans = f.xpath('.//p[@class="baseinfo"]/span/text()')
            layout = spans[0]  # layout (rooms)
            size = spans[1]  # floor area
            toward = spans[2]  # orientation
            floor = spans[3]  # floor
            links = f.xpath('.//p[@class="baseinfo"]//a/text()')
            address = links[1]  # location
            area = links[0]  # residential compound
            # road = links[2]
            # When the lister is an individual, the markup is
            # <span class="jjrname-outer anxuan-qiye-text">xxx</span>,
            # so selecting by class gives wrong results; select by position instead.
            # organization = f.xpath('.//div[@class="jjrinfo"]//span[@class="anxuan-qiye-text"]/text()')[0]
            # manager = f.xpath('.//div[@class="jjrinfo"]//span[@class="jjrname-outer"]/text()')[0]
            jjr = f.xpath('.//div[@class="jjrinfo"]//span/text()')
            organization = jjr[0]  # agency
            manager = jjr[2]  # agent
            price = f.xpath('.//div[@class="price"]/p[@class="sum"]/b/text()')[0]  # total price
            average = f.xpath('.//div[@class="price"]/p[@class="unit"]/text()')[0]  # price per square metre
            average = average.replace("元/㎡", '')
            # Listing date; don't name this variable `time`, or it shadows the time module.
            # publish_time = f.xpath('.//div[@class="time"]/text()')[0]
            print(title)
            print("#" * 50)
        nextpage = html.xpath('//div[@class="pager"]//a[@class="next"]/@href')  # next-page link
        if not nextpage:
            break  # last page reached
        url = urljoin(url, nextpage[0])  # a root-relative href resolves against https://ganzhou.58.com
        time.sleep(3)  # pause 3 s so frequent requests don't trigger bot verification


if __name__ == '__main__':
    GanjiErShouFang('http://ganzhou.ganji.com/shangyou/ershoufang/')
    WubaErShouFang('https://ganzhou.58.com/shangyou/ershoufang/')
```

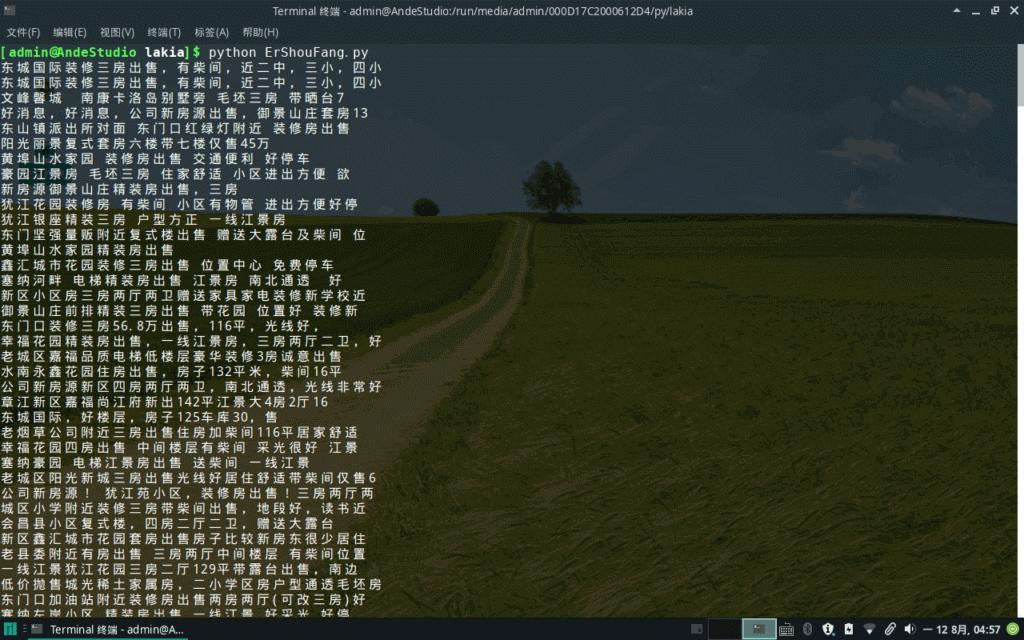

运行结果:

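I originally figured this was a tiny project, and there's no need to take a sledgehammer to a nut, so I skipped Scrapy and decided a scraper written from scratch would be enough. But things weren't that simple. The point of a scraper is to collect data that supports decisions, not just to print output and call it a day. First, it has to alert me to new listings; second, it has to work out which of the many listings offer the best value for money. Those two requirements set two traps. While coding, I also came up with two new ideas: one was to sample housing data from across the country and build a house-price index for investment analysis; the other was to go beyond property listings and scrape other data that could be put to use. So in the end, I still needed a framework.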
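The two requirements above (alerting on new listings and picking out good value) can be sketched in plain Python as follows. This is a minimal sketch, not the author's implementation. It assumes the scraper is changed to collect each listing as a dict with the hypothetical keys `title`, `href`, and `average` (the unit-price string with `元/㎡` already stripped, as in the code above) instead of printing it. It also treats the listing link as a unique ID and uses price per square metre alone as a stand-in for value for money. The helper names and the file name `seen.json` are illustrative only.

```python
import json
from pathlib import Path


def load_seen(path):
    """Load the set of listing links recorded on previous runs (empty on the first run)."""
    p = Path(path)
    if not p.exists():
        return set()
    return set(json.loads(p.read_text(encoding="utf-8")))


def save_seen(path, seen):
    """Persist the set of seen listing links as a JSON list."""
    Path(path).write_text(json.dumps(sorted(seen), ensure_ascii=False), encoding="utf-8")


def find_new_listings(listings, seen):
    """Return listings whose href is not in `seen`, adding their hrefs to `seen` as we go.

    Assumption: a listing's link (href) uniquely identifies it.
    """
    new = []
    for item in listings:
        if item["href"] not in seen:
            seen.add(item["href"])
            new.append(item)
    return new


def rank_by_unit_price(listings, top=5):
    """Return the `top` listings with the lowest price per square metre.

    Assumption: "average" holds a numeric string such as "7500".
    """
    return sorted(listings, key=lambda item: float(item["average"]))[:top]
```

On each run you would call `load_seen("seen.json")`, pass the freshly scraped listings to `find_new_listings`, notify yourself about whatever it returns, and then call `save_seen` with the updated set. A fuller value-for-money score would likely also weigh location, floor, and orientation rather than unit price alone.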